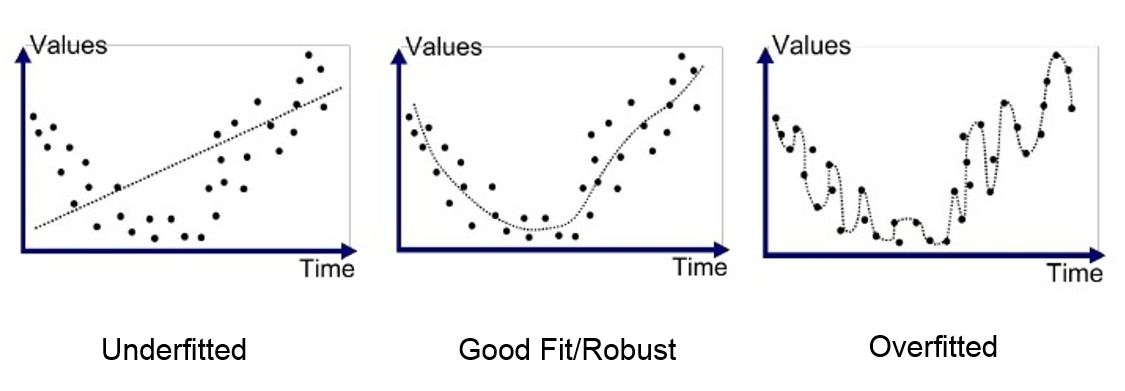

Ensuring Model Quality: Overfitting, Underfitting, and Good Fit in ML Testing

Sakthivel Murugesan

In software testing, ensuring that machine learning models make accurate, generalizable predictions is essential for building reliable applications. Here’s how testers can detect overfitting and underfitting, and confirm a good fit, to deliver high-quality models:

Overfitting in ML Models

Overfitting occurs when a model performs well on training data but poorly on unseen data, because it has learned noise in the training set instead of the true underlying patterns.

Testing Signs:

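- Cross-validation: Use k-fold cross-validation to evaluate performance across multiple held-out folds. Large variation in scores across folds, or a large gap between training-fold and validation-fold scores, often indicates overfitting.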

- Performance Discrepancy: Compare metrics (accuracy, precision) between training and test datasets. A significant drop in test accuracy suggests overfitting.

- Boundary Testing: Assess the model's responses to edge cases to see if it relies too heavily on specific patterns.
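The cross-validation and performance-discrepancy checks above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not a production test harness. It uses a toy one-dimensional dataset in which the true label is `x > 0.5` but 20% of labels are flipped. It also uses a 1-nearest-neighbour classifier, chosen here only because it memorizes its training data. The names `make_noisy_data`, `one_nn_predict`, and `k_fold_scores`, as well as the noise rate, are hypothetical.

```python
import random
import statistics


def make_noisy_data(n, noise, seed):
    """Hypothetical toy 1-D dataset: true label is x > 0.5, but a fraction `noise` is flipped."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x = rng.random()
        label = x > 0.5
        if rng.random() < noise:
            label = not label  # label noise that a well-fitted model should NOT learn
        data.append((x, label))
    return data


def one_nn_predict(train, x):
    """1-nearest-neighbour: return the label of the closest training point.
    It memorizes the training set, which makes it prone to overfitting noise."""
    nearest = min(train, key=lambda p: (p[0] - x) ** 2)
    return nearest[1]


def accuracy(train, data):
    """Fraction of points in `data` labelled correctly by a 1-NN model built on `train`."""
    return sum(one_nn_predict(train, x) == y for x, y in data) / len(data)


def k_fold_scores(data, k=5):
    """Return a (train_accuracy, validation_accuracy) pair for each of k folds."""
    folds = [data[i::k] for i in range(k)]
    scores = []
    for i in range(k):
        val = folds[i]
        train = [p for j, fold in enumerate(folds) if j != i for p in fold]
        scores.append((accuracy(train, train), accuracy(train, val)))
    return scores


data = make_noisy_data(200, noise=0.2, seed=0)
scores = k_fold_scores(data, k=5)
for fold, (train_acc, val_acc) in enumerate(scores, start=1):
    print(f"fold {fold}: train={train_acc:.2f} val={val_acc:.2f} gap={train_acc - val_acc:.2f}")
val_accs = [v for _, v in scores]
print(f"mean val={statistics.mean(val_accs):.2f}, std across folds={statistics.stdev(val_accs):.2f}")
```

Because 1-NN always finds each training point itself, training accuracy is 1.0 on every fold. Validation accuracy, by contrast, is dragged down by the flipped labels. That persistent train/validation gap is the overfitting signal described in the list. In a real project, a library routine such as scikit-learn's cross-validation utilities would replace the hand-written loop.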

Underfitting in ML Models

Underfitting happens when a model is too simple to capture the underlying patterns in the data, leading to poor performance on both training and test datasets.

Testing Signs:

- Consistency Across Datasets: Low performance metrics on both training and test data indicate underfitting.

- Pattern Recognition Testing: Feed the model data with clear, known patterns and check whether it fails to learn them.

- Incremental Data Testing: Retrain with additional relevant features and check whether accuracy improves; a clear gain suggests the original model was underfitting.
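The consistency and incremental-data checks for underfitting can be illustrated with a small experiment. This sketch rests on two assumptions: a toy dataset whose label is `x1 + x2 > 1`, and a nearest-centroid classifier. The helper names (`make_two_feature_data`, `fit_centroids`, `predict`, `accuracy`) are hypothetical. Restricting the model to `x1` alone makes it too simple for the data. Adding `x2` gives it the information it was missing.

```python
import random


def make_two_feature_data(n, seed):
    """Hypothetical toy dataset: label is True when x1 + x2 > 1."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        x1, x2 = rng.random(), rng.random()
        rows.append(((x1, x2), x1 + x2 > 1))
    return rows


def fit_centroids(rows, features):
    """Nearest-centroid model: per-class mean of the selected feature columns."""
    centroids = {}
    for label in (False, True):
        points = [[x[f] for f in features] for x, y in rows if y == label]
        centroids[label] = [sum(col) / len(points) for col in zip(*points)]
    return centroids


def predict(centroids, x, features):
    """Assign the class whose centroid is closest (squared Euclidean distance)."""
    v = [x[f] for f in features]
    return min(centroids, key=lambda lbl: sum((a - c) ** 2 for a, c in zip(v, centroids[lbl])))


def accuracy(centroids, rows, features):
    """Fraction of rows whose predicted label matches the true label."""
    return sum(predict(centroids, x, features) == y for x, y in rows) / len(rows)


train = make_two_feature_data(500, seed=1)
test = make_two_feature_data(500, seed=2)
for features in ([0], [0, 1]):  # incremental test: first x1 only, then x1 and x2
    model = fit_centroids(train, features)
    print(f"features={features}: train={accuracy(model, train, features):.2f} "
          f"test={accuracy(model, test, features):.2f}")
```

With only `x1`, accuracy should be similarly mediocre on both train and test, which is the underfitting signature. Adding `x2` should raise both, because the class boundary depends on both features.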

Good Fit in ML Models

A model that performs well across both training and test datasets demonstrates good generalization.

Testing Signs:

- Validation Testing: Evaluate the model on multiple validation sets and confirm that performance is consistent across them.

- A/B Testing: In production, use A/B tests on live data to verify model performance.

- Bias & Variance Testing: Measure bias (indicated by high training error) and variance (indicated by a large gap between training and test error); a good fit keeps both low.
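The validation and bias/variance checks can be folded into one diagnostic helper that a test suite might assert on. This is only a sketch. The function name `diagnose_fit` is hypothetical, and the thresholds `target_error`, `max_gap`, and `max_spread` are assumed placeholders that each project would set to its own quality bar.

```python
from statistics import mean, pstdev


def diagnose_fit(train_error, val_errors, target_error=0.10, max_gap=0.05, max_spread=0.03):
    """Classify model fit from training error and errors on several validation sets.

    All thresholds are hypothetical defaults; tune them to your project's requirements.
    """
    val_error = mean(val_errors)
    if train_error > target_error:
        return "underfit"  # high bias: the model cannot even fit the training data
    if val_error - train_error > max_gap:
        return "overfit"  # high variance: large gap between training and validation error
    if pstdev(val_errors) > max_spread:
        return "unstable"  # performance is inconsistent across validation sets
    return "good fit"


print(diagnose_fit(0.30, [0.32, 0.31]))        # high training error
print(diagnose_fit(0.01, [0.20, 0.22]))        # large generalization gap
print(diagnose_fit(0.05, [0.06, 0.07, 0.06]))  # low error, small gap, consistent
```

The bias check runs first because a large generalization gap is hard to interpret when the model cannot fit the training data in the first place.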

From a software testing perspective, understanding and testing for overfitting, underfitting, and a good fit ensures that machine learning models not only perform accurately but are also robust, adaptable, and ready for real-world deployment.
